Winter driving conditions can make sharing the road more dangerous for people on bikes. Snow, ice, reduced daylight, and foggy windshields create situations where drivers may have difficulty seeing or reacting to bicyclists. In addition, many motorists drive distracted, without their full attention on the road. Here’s why these situations are particularly risky in the colder months and how to navigate them safely:

Take the Lane When Necessary: In winter, roads often become narrower due to leaf piles, snow piles, icy shoulders, or debris pushed aside by plows. This leaves less room for vehicles to pass cyclists safely. By taking the lane:

- You increase your visibility: Riding in the center of the lane ensures drivers see you earlier and have time to adjust their speed and path.

- You discourage unsafe passing: Drivers are more likely to wait for a safe opportunity to overtake, rather than attempting a tight squeeze that could lead to an accident.

- You avoid hazards: Staying out of the gutter helps you steer clear of leaves, icy patches, puddles, or slush, which can destabilize your bicycle.

Use Lights and Reflectors: Proper lighting isn’t just a smart safety precaution; it’s also required by law in North Carolina. When riding at night, you must have a white front light visible from at least 300 feet and a red rear reflector or light visible from at least 200 feet. (§ 20-129(e))

Be Extra Vigilant at Crossings: Intersections become especially hazardous in winter for several reasons:

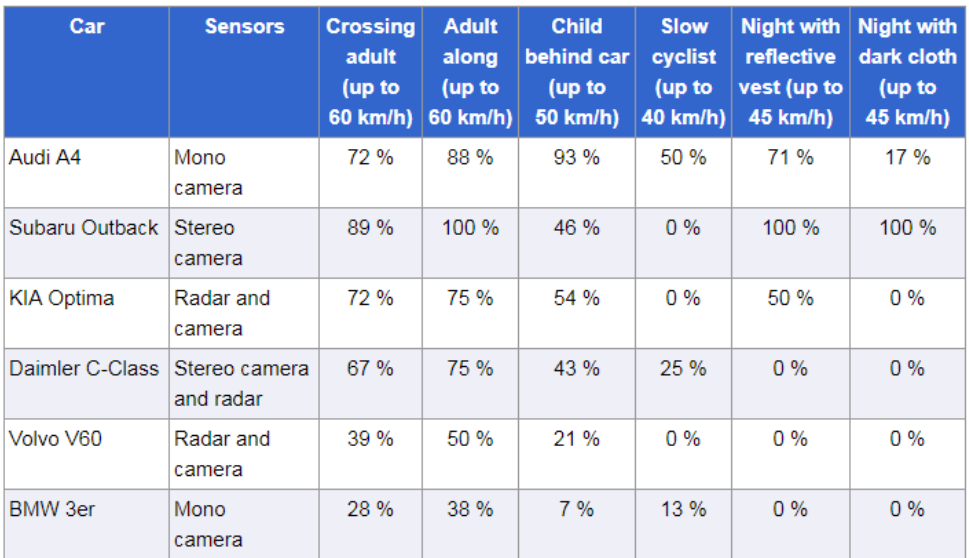

- Drivers have reduced visibility: Foggy or poorly cleared windshields, frost, and snow-covered mirrors can obscure their view, particularly of people on bikes who are smaller and harder to spot.

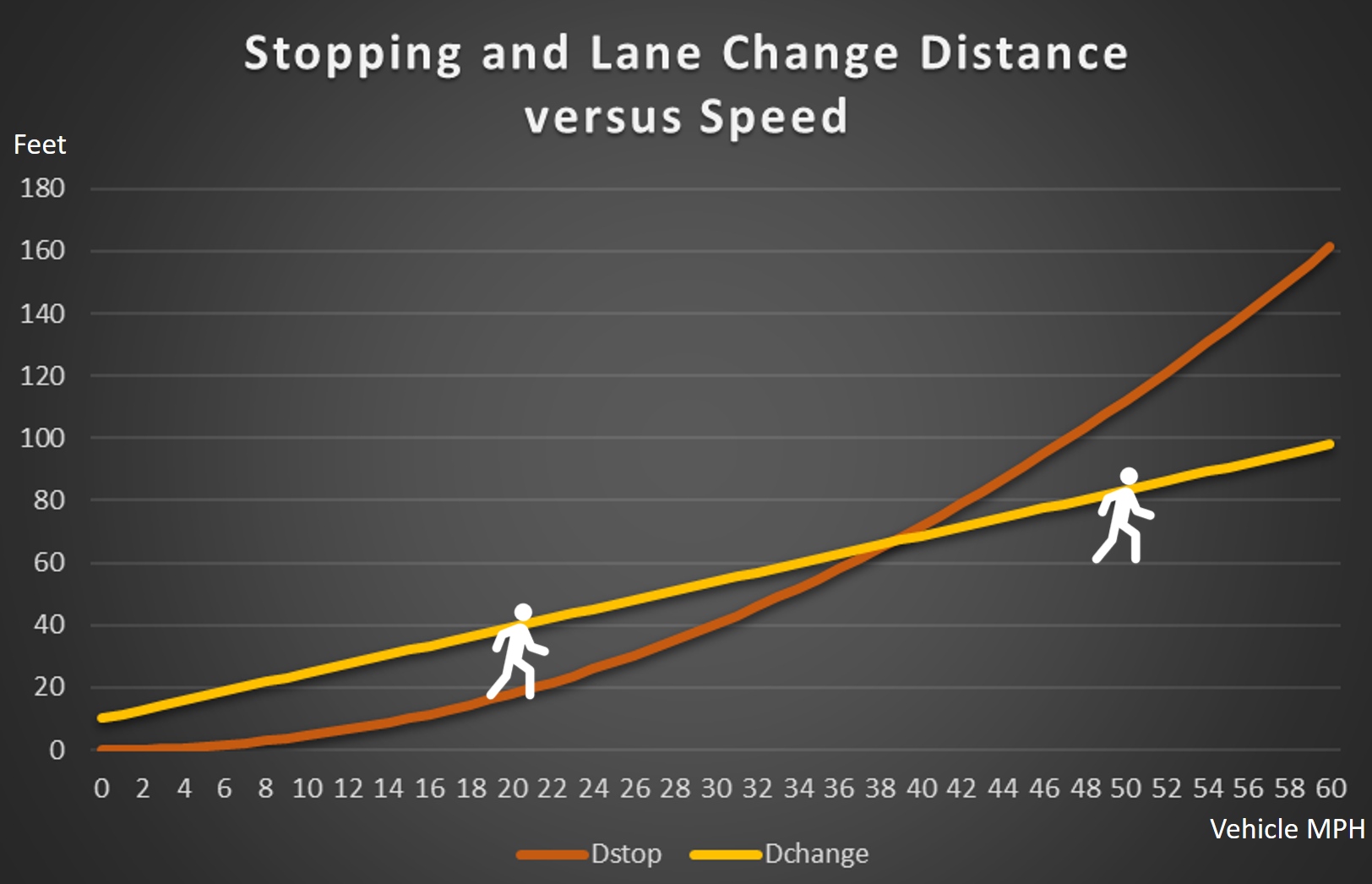

- Braking distances increase: Slippery roads mean that even if a driver sees you, they may not be able to stop in time.

- Turning vehicles are unpredictable: Drivers focused on navigating icy turns may fail to notice bicyclists in or approaching the intersection.

By staying alert and riding proactively, you can make your winter rides safer and more enjoyable. Got a tip for staying safe as a cyclist?

We’d love to hear your advice! Share your ideas with us at outreach@bikewalknc.org, and we might feature them in a future newsletter or post.